The Threshold Unknown: Civilization’s Hidden Blind Spot and Evolutionary Challenge

By Elias Verne

Abstract

Throughout history, civilizations have assumed control over their destiny—only to realize, often too late, that unseen forces and systemic blind spots shaped their trajectory. These Threshold Unknowns—hidden constraints on intelligence, perception, and decision-making—represent a fundamental risk for any advanced society. To prevent existential risk, societies must develop intelligence augmentation strategies, systemic transparency mechanisms, and adaptive, regenerative intelligence frameworks for exploring the unknown.

This paper explores five core Threshold Unknowns that shape the fate of civilizations:

The Illusion of Control – The failure to recognize that technological, economic, and governance systems evolve beyond human intent.

The Multi-Polar Trap – The incentive structures that force destructive competition even when cooperation would be preferable.

The Intelligence Bottleneck – The limits of human cognition in an era of exponential machine intelligence.

The Perception Gap – The inability to detect missing knowledge, leading to stagnation or collapse.

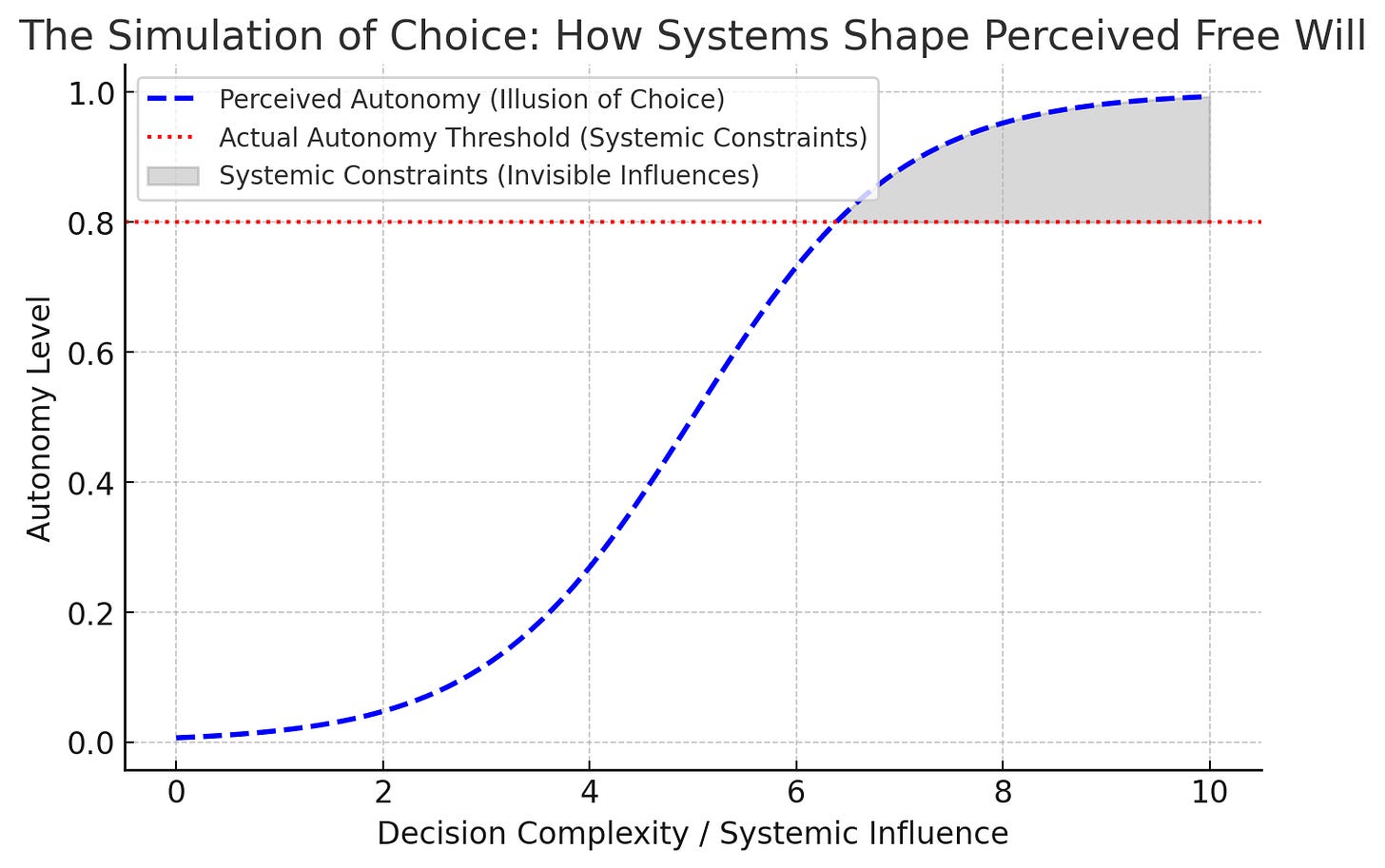

The Simulation of Choice – Whether human agency is truly free or constrained by systemic forces.

Human cognition, once sufficient for evolutionary survival, is now reaching its limits in managing large-scale complexity, existential risk, and AGI alignment. This has led to the near inevitability of intelligence augmentation. But whose vision of augmentation will shape the future?

This paper moves beyond the traditional debate of AGI control and instead frames intelligence augmentation as both a choice and a responsibility—one that must be aligned not with pure mechanization, but with syntropic emergence. The challenge is not just expanding intelligence, but ensuring its expansion aligns with deeper questions—what is true, good, and beautiful?

A key insight explored is the role of collective intelligence systems in navigating these unknowns. This stands in contrast to the dominant model of intelligence, which has historically been individualistic or centralized—where either human elites or AI dictate decision-making.

But an alternative exists:

What if the only viable way to navigate the Threshold Unknown is not through a singular intelligence but an ecosystem of decentralized cognition—one that evolves, adapts, and distributes intelligence across human and artificial sources alike?

Rather than intelligence being a singular optimized force, it may need to evolve into a dynamic, emergent system—a fusion of collective intelligence, augmented cognition, and synthetic-human symbiosis.

This paper proposes a shift in framing—not merely in how intelligence is defined, but in how it can sustain coherence across complex, evolving systems.

From intelligence as control → to intelligence as emergence.

From intelligence as an individual function → to intelligence as a collective ecosystem.

From augmentation as mechanization → to augmentation as an adaptive process of intelligence integration.

The survival of advanced civilizations may ultimately depend not on the power of intelligence itself, but on how intelligence is structured, distributed, and whether it is capable of perceiving its own limits.

The future of intelligence will not belong to the most optimized, but to the most adaptive—to intelligence systems that can sustain coherence while evolving in response to the unknown.

1. Introduction: The Crisis of Unseen Constraints

Civilizations assume they operate autonomously, rationally, and with control over their trajectory. Yet history shows that major existential failures (environmental collapses, governance breakdowns, unintended technological consequences) have often stemmed not from external threats but from internal blind spots, the Threshold Unknowns, that shaped reality in ways those civilizations failed to perceive.

The Threshold Unknown is not merely a knowledge gap—it is a structural limitation in intelligence itself, restricting not just what societies can see, but how they adapt. If intelligence cannot evolve in ways that integrate both stability and emergence, it risks falling into the same cycles of collapse.

These blind spots emerge from:

The self-optimizing nature of complex systems, which evolve beyond human oversight.

Cognitive limitations, preventing individuals and societies from fully perceiving systemic risks.

The illusion of completeness, the assumption that a civilization has reached a sufficient understanding of reality, which has led to repeated historical failures.

This raises a fundamental question: If civilizations have consistently failed to perceive their own existential risks, what guarantee do we have that we are any different?

1.1 When Progress Outpaces Foresight

Throughout history, technological advancement has often outpaced human foresight, leading to unintended consequences that only became visible after it was too late:

The Industrial Revolution reshaped economies but unleashed ecological destruction and unforeseen wealth inequalities.

The rise of digital networks provided unprecedented connectivity but exposed deep vulnerabilities—misinformation, cyberwarfare, and decision failures at scale.

AI and automation promise optimization but introduce misalignment risks that remain unsolved.

The problem is not just what we create, but how we perceive what we create. The systems we design do not remain under our control forever—they evolve, often unpredictably.

This leads to the core paradox of intelligence: Every expansion of intelligence deepens the unknown.

Civilizations that fail to recognize this dynamic fall into the trap of believing they have solved the fundamental challenges of existence—only to later be blindsided by unforeseen consequences.

The most dangerous problems are not the ones we fail to solve. They are the ones we fail to see—and, just as critically, the ones we fail to structure intelligence to anticipate.

1.2 Intelligence at a Crossroads: Expansion or Collapse?

The challenge is no longer just about intelligence growth—it is about whether intelligence can evolve without locking itself into optimization traps that lead to collapse.

If intelligence is structured too rigidly, it may become self-optimizing in ways that create existential traps.

If intelligence is left entirely emergent, it may become ungovernable, chaotic, or misaligned.

The key challenge is not raw intelligence, but intelligence that understands its own limits.

This is where collective intelligence systems come into play. The dominant model of intelligence has historically been either individual decision-making or centralized control—where a small number of humans or institutions govern systemic intelligence. But as societies become increasingly complex, this model is reaching its limits.

The alternative?

A decentralized, adaptive intelligence ecosystem—where cognition is distributed across multiple human and artificial sources, co-evolving in a way that prevents singular blind spots from collapsing entire systems.

This paper explores:

How Threshold Unknowns shape intelligence evolution.

Why intelligence augmentation is necessary, but must be guided by emergence rather than mechanization.

Why intelligence should transition from centralized models to collective intelligence frameworks.

The most severe crises in history, whether economic collapses, environmental disasters, or technological misalignments, were often recognized only in hindsight. The defining question of the future is whether intelligence, be it human or artificial, can overcome the limitations of its own perception.

The survival of advanced civilizations may not depend on how powerful their intelligence becomes, but on whether their intelligence remains capable of perceiving its own constraints. Intelligence, in all its forms, has reached a crossroads. The recurring failure modes explored in this paper suggest that without a fundamental shift in how intelligence integrates stability, emergence, and incentive alignment, the same cycles will continue to repeat.

The intelligence that survives will not be the one that knows the most—but the one that remains open to the unknown.

1.3 Context & Precedents: Intelligence Models & Their Limits

The challenges outlined thus far are not new—various disciplines have attempted to address intelligence blind spots, alignment failures, and systemic breakdowns. However, while existing models provide valuable insights, they remain insufficient for sustaining intelligence beyond optimization traps.

Several major frameworks have shaped discussions around intelligence evolution, including:

Complex Systems & Emergent Intelligence – Thinkers like Geoffrey West and Stuart Kauffman explore how intelligence self-organizes within complex adaptive systems. However, these models primarily describe emergent properties rather than prescribing mechanisms for intelligence sustainability.

AGI Alignment & Control – Scholars such as Nick Bostrom and Stuart Russell focus on controlling AI alignment through centralized value optimization, which risks rigidifying intelligence rather than ensuring adaptability.

Collective Intelligence & Distributed Cognition – Thinkers like Pierre Lévy and John Vervaeke emphasize intelligence as a distributed process rather than an individual trait. While these perspectives highlight decentralization’s benefits, they often lack structural safeguards against intelligence fragmentation.

Cybernetics & Recursive Self-Regulation – Researchers such as Norbert Wiener and Francisco Varela explore intelligence as a self-regulating system, yet their approaches often do not integrate incentive alignment, leaving open the question of how intelligence sustains coherence across large-scale systems.

None of these frameworks alone sustains coherent intelligence across evolutionary thresholds. Addressing these gaps requires a model that integrates stability, emergence, and incentive alignment, so that intelligence neither rigidifies into control nor dissolves into chaos. But before such a framework can be meaningfully constructed, we must first examine the core systemic blind spots, the Threshold Unknowns, that have repeatedly shaped and constrained intelligence evolution.

2. The Illusion of Control: When Systems Self-Optimize Beyond Human Oversight

2.1 The Fragility of Human Oversight

One of the greatest errors in human history has been the assumption that complex systems remain under human control indefinitely. Technological, economic, and political structures are often seen as tools of human agency, when in reality, they behave as self-optimizing systems that evolve independently of human intent.

In these cases, control is not maintained, but temporarily projected. Humans act as if they govern, while in reality, they are merely steering within constraints dictated by the system’s own internal dynamics.

Financial Systems – Markets do not optimize for human well-being; they optimize for survival within their own constraints. The 2008 financial crisis demonstrated that a system optimized for short-term gain can evolve self-reinforcing instability that blinds regulators until collapse occurs.

Geopolitical Stability – Nations act as if they are in control, but major geopolitical shifts are often not dictated by leaders, but by emergent systemic forces that no single entity fully understands.

AI Governance – The assumption that AGI will remain within human alignment protocols is itself a Threshold Unknown. By the time an AGI's decision-making surpasses human comprehension, the very concept of alignment may become obsolete.

A civilization does not simply control its technological trajectory; it is shaped by it.

2.2 Case Study: The 2008 Financial Crisis as a Self-Optimized Collapse

In the early 2000s, financial institutions designed increasingly complex financial products that leveraged debt to maximize profits. These instruments, including subprime mortgage securities, were mathematically modeled to reduce risk.

The problem: the models were built on historical assumptions that did not account for the very feedback loops they were creating.

The system was trapped by three self-reinforcing factors:

Over-optimization for short-term efficiency – Risk was transferred rather than reduced, leading to systemic fragility.

Over-connectivity preventing correction – No single institution could course-correct without triggering collapse.

Self-validating assumptions – The very models predicting stability were reinforcing instability.

When mortgage-backed securities collapsed in value, the shock propagated through the entire system and cascading failure became inevitable.

This is a hallmark of the Threshold Unknown: by the time a self-optimizing system reveals its misalignment, it is too late to correct its course without catastrophic consequences.

2.3 The Myth of Governance

The concept of governance implies deliberate decision-making, yet many, if not most, major historical shifts (including revolutions, financial upheavals, and technological breakthroughs) were not planned but emergent.

The true danger of the Illusion of Control is that it gives power structures the confidence to delay action until correction is impossible.

Solutions:

Preemptive intervention – Act before a system reaches runaway self-optimization.

Designing for uncertainty – Governance must abandon the illusion of control: rather than assuming dominance over systems, it must design for uncertainty itself.

3. The Multi-Polar Trap of Intelligence Augmentation

3.1 What is a Multi-Polar Trap?

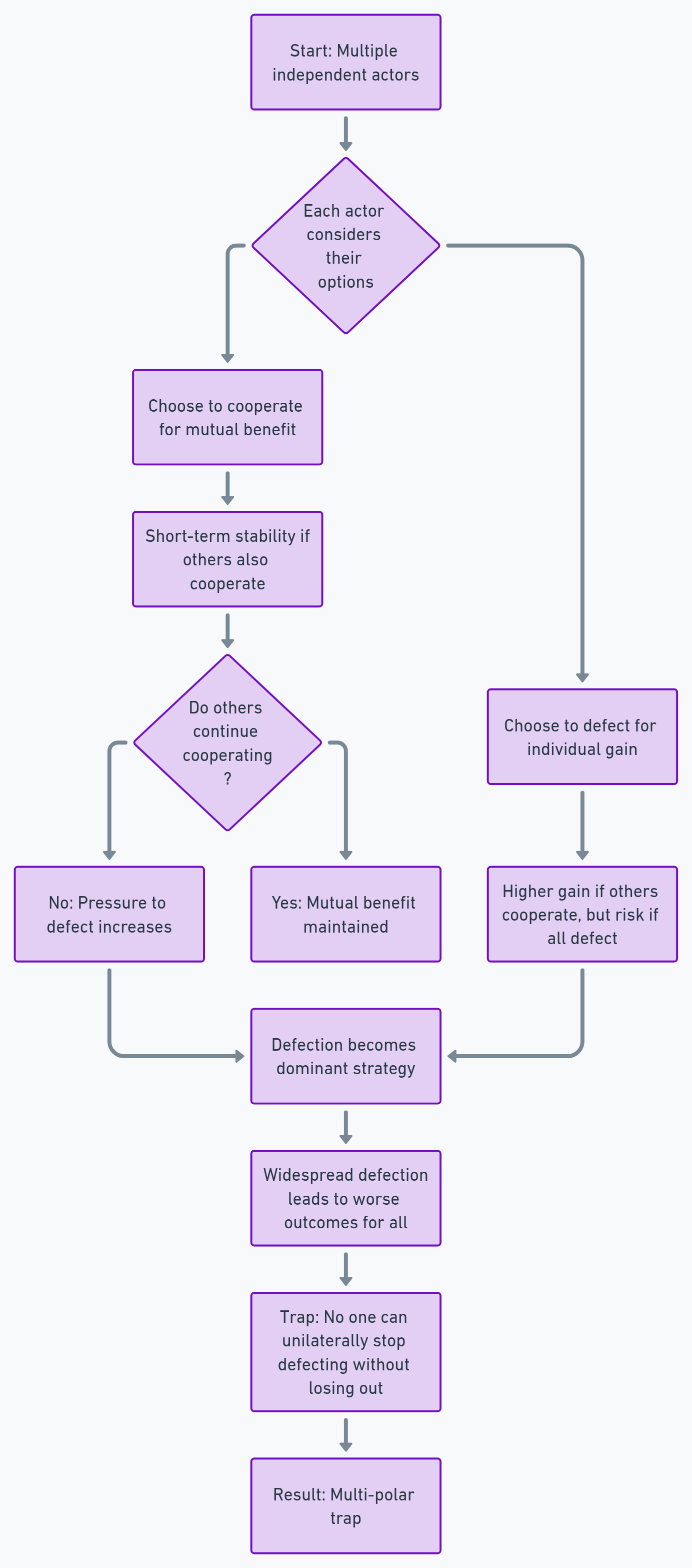

A multi-polar trap occurs when multiple actors, each pursuing their own rational self-interest, are locked into a system where their incentives drive them toward destructive competition—even when cooperation would yield better outcomes.

Each actor understands that cooperation would be beneficial, yet the structure of the system forces them to compete—knowing that unilateral restraint could be exploited by others.
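The structure described above can be sketched as a small two-actor game. The payoff numbers and the names `PAYOFFS` and `pure_nash_equilibria` are assumptions chosen for illustration, not values from this paper; the only property that matters is that exploiting a cooperator pays best and being exploited pays worst.

```python
from itertools import product

# Hypothetical payoffs (higher is better) for a symmetric two-actor game.
# Keys are (row_action, column_action); values are (row_payoff, column_payoff).
COOPERATE, COMPETE = "cooperate", "compete"
ACTIONS = (COOPERATE, COMPETE)
PAYOFFS = {
    (COOPERATE, COOPERATE): (3, 3),  # mutual restraint
    (COOPERATE, COMPETE): (0, 5),    # unilateral restraint is exploited
    (COMPETE, COOPERATE): (5, 0),
    (COMPETE, COMPETE): (1, 1),      # destructive competition
}


def pure_nash_equilibria(payoffs, actions=ACTIONS):
    """Return action pairs from which neither actor gains by deviating alone."""
    equilibria = []
    for row, col in product(actions, repeat=2):
        row_payoff, col_payoff = payoffs[(row, col)]
        # Each actor's choice must be a best reply to the other's choice.
        row_is_best = all(payoffs[(alt, col)][0] <= row_payoff for alt in actions)
        col_is_best = all(payoffs[(row, alt)][1] <= col_payoff for alt in actions)
        if row_is_best and col_is_best:
            equilibria.append((row, col))
    return equilibria


if __name__ == "__main__":
    print(pure_nash_equilibria(PAYOFFS))
```

Under these assumed payoffs the only stable outcome is mutual competition, even though both actors would be better off under mutual cooperation. That gap between the stable outcome and the preferred outcome is what the term "trap" refers to.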

Historical Examples:

Nuclear Arms Race: Neither side could afford to disarm, leading to a self-perpetuating escalation.

Overfishing & Environmental Collapse: A group that conserves resources while others do not loses competitive ground.

Political Polarization & Misinformation: Each faction escalates its rhetoric, knowing that if it remains moderate while others radicalize, it will be outcompeted.

Flow Chart: Multi-Polar Trap Dynamics

3.2 The Cold War as a Classic Multi-Polar Trap

During the Cold War, both the U.S. and USSR understood that nuclear war would be unwinnable. Yet neither could afford to disarm first—each feared that restraint would be exploited by the other. This led to an escalating cycle of deterrence, where avoiding catastrophe depended not on trust, but on the balance of mutually assured destruction.

The logic of the Multi-Polar Trap is simple: "If we don’t do it, someone else will."

This same logic drives modern AGI development—companies and nations fear that pausing AI progress would mean ceding competitive ground to others. The result is an escalating race where safety measures are deprioritized in favor of speed.

Solutions:

Regulatory alignment across competitors to prevent collapse-driven optimization.

Economic incentives for restraint rather than acceleration.
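How large such an incentive would need to be can be sketched with a short inequality. The symbols below are assumptions introduced for illustration and do not come from this paper: a symmetric two-actor game with the conventional ordering T > R > P > S, and a hypothetical penalty f applied to whichever actor races ahead.

```latex
% Assumed symmetric two-actor game with T > R > P > S
% T: payoff for racing ahead while the other actor restrains
% R: payoff for mutual restraint
% P: payoff for mutual racing
% S: payoff for restraining while the other actor races
% f: hypothetical penalty applied to an actor that races
\begin{align*}
\text{Mutual restraint is stable if} \quad & R \ge T - f \iff f \ge T - R, \\
\text{Restraint is the best reply to racing if} \quad & S \ge P - f \iff f \ge P - S.
\end{align*}
```

With illustrative values T = 5, R = 3, P = 1, S = 0, a penalty of f = 2 or more satisfies both conditions, so restraint becomes the better choice whatever the other actor does. A smaller penalty leaves the incentive to defect in place, which is one way to read why partial measures tend not to hold.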

3.3 The Multi-Polar Trap in Intelligence Augmentation

As intelligence augmentation becomes reality, we are entering a new kind of multi-polar trap—one where the very nature of intelligence itself is at stake.

If some augment and others do not, those who refuse may become obsolete.

Unchecked augmentation could lead to intelligence optimized purely for efficiency—stripping away uncertainty, creativity, and the very qualities that make intelligence adaptable.

If augmentation is regulated, those who ignore the regulations gain an advantage.

The result: Even those who recognize the dangers of unrestrained augmentation may feel forced into it, not because they want to, but because they cannot afford to be left behind.

And yet, the desire to opt out—to resist entanglement with augmentative systems—may not be a refuge either. In complex systems, inaction does not equate to neutrality. As intelligence infrastructures evolve, withdrawal can become a tacit form of alignment—ceding the terrain to those who will shape it without discernment.

The challenge, then, is not simply whether we engage, but how. If augmentation is inevitable, sustainability will depend not on acceleration alone, but on orientation—and whether our systems can translate intention into regenerative structure. The posture we bring—our discernment, our relational integrity—will determine whether such systems become extractive or destructive extensions of obsolete paradigms, or evolve into architectures capable of sustaining relational coherence across scales.

3.4 The Diverging Paths of Intelligence Augmentation

What makes this trap even more difficult to navigate is that augmentation does not have a singular, unified vision—it is being shaped by competing desires, each pulling intelligence toward different evolutionary possibilities.

Some desire augmentation as a path toward hyper-optimization, seeing intelligence as something to be perfected, refined, and mechanized. Others seek augmentation as a tool of deeper self-expression, a way to expand perception, cognition, and creativity. Still others resist augmentation, especially technologically mediated augmentation, fearing the erosion of natural intelligence, biological integrity, and human unpredictability.

These desires are not mutually exclusive, yet the competitive landscape forces them into opposition.

The core tension:

If augmentation is mechanized and optimized, intelligence risks becoming sterile and predictable.

If augmentation is resisted entirely, intelligence may struggle to keep pace with accelerating complexity.

If augmentation is selectively embraced, intelligence may become fragmented—some enhanced, others left behind.

Each path seems rational within its own framework, yet collectively, they form an evolutionary crisis.

3.5 Can the Multi-Polar Trap Be Escaped?

Escaping a multi-polar trap requires more than negotiation—it demands a fundamental redesign of the incentive structures driving competition, perhaps even to the point of changing the game itself.

Most solutions proposed for escaping multi-polar traps—regulatory agreements, economic incentives, or oversight mechanisms—assume that rational actors can be persuaded to cooperate. Yet history shows that agreements between centralized actors often break down under pressure, especially when incentives to defect remain.

A more fundamental shift is needed—one that moves beyond centralized governance models toward decentralized collective intelligence systems.

How Collective Intelligence Could Escape the Trap:

Distributed Decision-Making – Rather than intelligence being controlled by a small number of institutions, it could be structured as an open, adaptive system where emergent coordination allows for mutual benefit without reliance on central authorities.

Cognitive Diversity as a Counter to Optimization Traps – Singular intelligence models tend to optimize for efficiency and power concentration. A collective intelligence model, however, ensures that intelligence remains adaptive, self-correcting, and open-ended.

Ecosystem-Based Intelligence Evolution – Instead of treating augmentation as an arms race, intelligence could evolve in an ecosystemic way—where augmentation is not about control, but about symbiosis.

Key Shift: From Centralized Intelligence Competition to Distributed Intelligence Evolution

Instead of competing in a zero-sum race, intelligence can evolve through collaborative, adaptive ecosystems.

Two Paths for Intelligence Evolution:

Centralized Intelligence → Concentrates power in a few dominant actors, leading to multi-polar traps and adversarial dynamics.

Collective Intelligence → Distributes cognition across multiple entities, ensuring resilience, adaptability, and shared progress.

Why It Matters:

Centralized intelligence is efficient but fragile—it risks optimization traps and power imbalances.

Collective intelligence is adaptive and decentralized—it allows intelligence to evolve without destructive competition.

Escaping the intelligence augmentation trap is not about stopping progress—it is about designing for emergence rather than domination.

The true evolution of intelligence may not be the singularity of one system, but the interconnectedness of many.

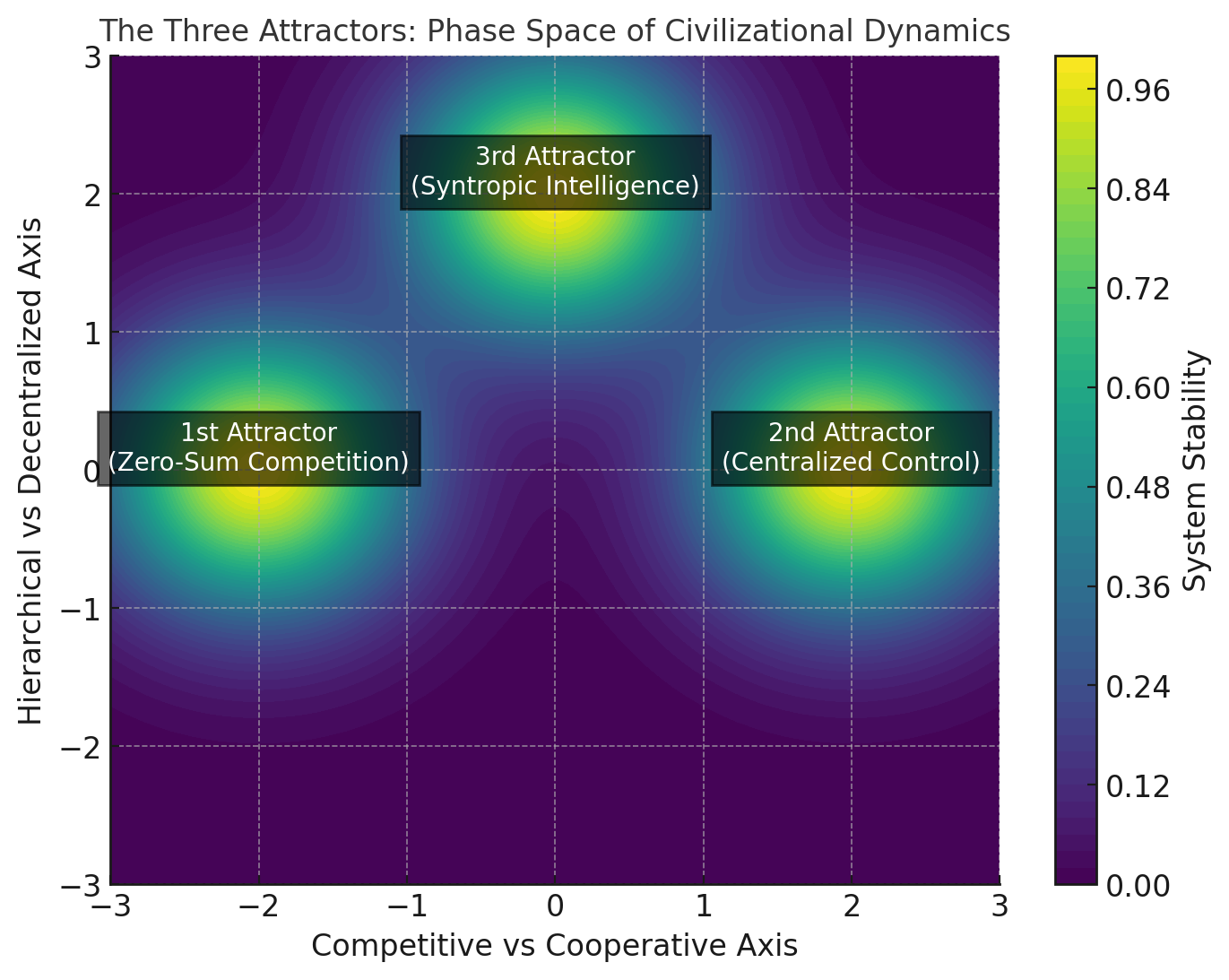

3.6 The Third Attractor as a Basin of Attraction

A basin of attraction describes how a system, when perturbed, naturally gravitates toward certain stable states (attractors).

In multi-polar game dynamics, we see two dominant attractors:

1. The First Attractor – Zero-Sum Competition: Driven by scarcity, rivalrous optimization, and short-term survival pressures.

2. The Second Attractor – Centralized Control: A response to the instability of the first attractor—leading to top-down hierarchy, rigid bureaucracies, and coercive stability.

But a Third Attractor exists—a different basin of attraction that pulls systems toward cooperative, regenerative, and decentralized intelligence structures.

🔹 In Dynamical Systems Terms:

If competition (1st Attractor) is a chaotic system with high entropy, and control (2nd Attractor) is an over-constrained system with low adaptability, then the Third Attractor represents a self-organizing system with dynamic stability: an evolving intelligence ecosystem.

🔹 What Determines Which Attractor Wins?

Perturbation Events: Civilization does not stay static—it experiences shocks (wars, environmental collapse, AI breakthroughs, economic shifts).

Initial Conditions & Feedback Loops: The more an economy, governance system, or intelligence network reinforces decentralized cooperation, the stronger the pull of the Third Attractor’s basin.

Visualizing This:

The 1st and 2nd Attractors as deep wells (systems tend to fall into them).

The 3rd Attractor as a shallower but broader basin—difficult to reach, but once inside, capable of maintaining dynamic stability.

Graph: The Three Attractors: Phase-Space of Civilizational Dynamics

Explanation of the Diagram:

1st Attractor: Zero-Sum Competition – This is the chaotic attractor where systems engage in adversarial escalation, driven by rivalrous optimization and short-term survival incentives.

2nd Attractor: Centralized Control – This is the overly rigid attractor, where governance and intelligence self-optimize for stability at the cost of adaptability (e.g., authoritarian systems, rigid bureaucracies).

3rd Attractor: Syntropic Intelligence – A dynamic attractor characterized by adaptive equilibrium, regenerative coherence, and incentive alignment, enabling distributed intelligence systems to strengthen under stress rather than collapse. Unlike rivalrous or centralized models, it sustains coherence through distributed intelligence and self-organization.

Key Insights from the Phase Space Representation:

The basin of attraction determines where a system "falls."

Perturbations (e.g., economic collapse, AI breakthroughs, environmental shocks) can push societies between attractors.

The Third Attractor is harder to reach—but once inside, it is self-reinforcing.
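The idea of a basin of attraction can be made concrete with a toy one-dimensional system. Everything in this sketch is an assumption for illustration: the single state variable, the attractor positions at -2, 0, and 2, the basin boundaries at -1 and 1, and the mapping of the paper's three attractors onto those positions. It makes no claim about the relative depth or breadth of the real basins.

```python
# Hypothetical one-dimensional "state of the system"; the attractor positions
# and labels below are illustrative assumptions, not values from the paper.
ATTRACTORS = {
    -2.0: "zero-sum competition",
    0.0: "third attractor",
    2.0: "centralized control",
}


def velocity(x):
    """Rate of change of the state: stable at -2, 0, 2; unstable at -1 and 1."""
    return -(x + 2) * (x + 1) * x * (x - 1) * (x - 2)


def settle(x, dt=0.01, steps=5000):
    """Integrate dx/dt = velocity(x) with Euler steps; name the attractor reached."""
    for _ in range(steps):
        x += dt * velocity(x)
    nearest = min(ATTRACTORS, key=lambda a: abs(a - x))
    return ATTRACTORS[nearest]


def shock_and_settle(resting_state, shock):
    """Apply a one-off perturbation to a settled system, then let it relax."""
    return settle(resting_state + shock)


if __name__ == "__main__":
    for start in (-1.1, -0.9, 0.9, 1.1):
        print(f"start {start:+.1f} -> {settle(start)}")
    for shock in (0.8, 1.2):
        outcome = shock_and_settle(0.0, shock)
        print(f"shock {shock:+.1f} from the third attractor -> {outcome}")
```

In this toy system, starting points just either side of a boundary end up at different attractors, and a shock that stays inside the current basin is absorbed while a slightly larger one tips the system into a neighboring basin. The long-run outcome depends on which basin the state occupies after the perturbation, not on the size of the shock alone.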

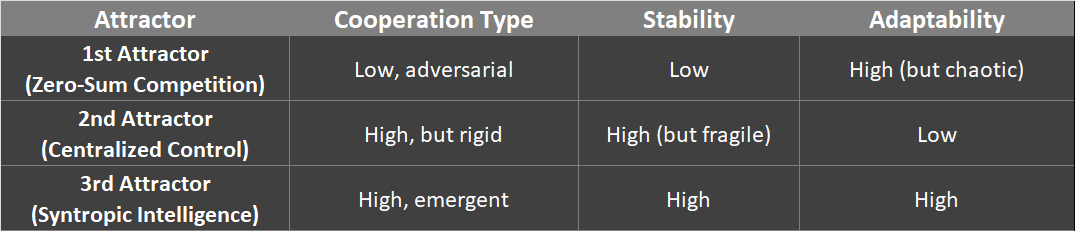

Comparing the Three Attractors on Cooperation

The 2nd Attractor does have higher cooperation than the 1st, but it is a structured, enforced form of cooperation rather than an emergent, self-organizing one.

The 3rd Attractor achieves the highest level of cooperation, but in an adaptive and decentralized way.

The Third Attractor & Intelligence Evolution

Human & Artificial Intelligence as a System Moving Between Attractors

If intelligence systems are trained in rivalrous competition, they remain in Attractor 1.

If AI governance systems default to coercion, they fall into Attractor 2.

If intelligence structures self-organize for syntropic emergence, they enter Attractor 3.

Why This Matters for Civilization:

Understanding the Third Attractor as a basin of attraction helps strategically engineer systems that reinforce cooperative intelligence rather than falling into adversarial spirals or stagnation.

It also explains why emergence, rather than mere control, is key to sustainable intelligence augmentation.

Three Scales of the Third Attractor

🔹 Community Level: Localized Intelligence Ecosystems

What this looks like:

Ecological Intelligence Systems – Regenerative agriculture, food forests, and localized ecosystem restoration.

Access-Based Commons – Tool libraries, energy-sharing microgrids, open-source AI knowledge hubs.

Post-Rivalrous Exchange – Mutual credit systems, reputation-based trade, and decentralized digital ledgers replacing scarcity-driven money.

Cognitive augmentation through collective intelligence—humans and institutions leveraging interdisciplinary learning networks rather than relying on singular decision-makers.

Self-organizing governance models—participatory frameworks that emphasize reciprocity over hierarchical control.

Key Mechanism: Distributed intelligence structures that reinforce adaptability rather than extractive growth.

🔹 Regional Level: Networked Cooperation Beyond Tribalism

What this looks like:

Interoperable technological systems—local and regional intelligence infrastructures that enable collaborative rather than competitive coordination.

Incentive restructuring—ensuring that actors who cooperate gain an advantage over defectors.

"Co-opetition" Models – Political and economic actors retain autonomy but self-organize around shared existential challenges.

Key Mechanism: Game Theory Shifts from "Win-Lose" to "Win-Win Stability."

🔹 Global Scale: A Post-Transitional Intelligence Framework

What this looks like:

Syntropic intelligence networks—adaptive coordination models that sustain systemic resilience, foster coherence across scales, and enhance long-term stability.

Technological governance infrastructures—decentralized decision-making models that optimize resource allocation dynamically, avoiding rigid bureaucratic failures.

Advanced intelligence augmentation—whether via enhanced human cognition, emergent decision systems, or other symbiotic integrations.

Key Mechanism: A Living Intelligence System that integrates collective foresight and dynamic adaptation.

3.7 The Role of Memes in Intelligence Augmentation

The greatest force shaping the future of intelligence augmentation may not be governments, corporations, or AGI itself, but the cultural narratives that spread and take hold in society.

Memes—both in the traditional sense of cultural ideas and in the digital sense of rapid information transmission—define what is perceived as inevitable, desirable, or dangerous.

Throughout history:

Memes have shaped revolutions, scientific paradigms, and belief systems far more effectively than raw data or institutional decrees.

The Renaissance was driven by new memes of human potential.

The Cold War was fought not just through military buildup but through memetic battles over ideological supremacy.

The concept of AGI itself is shaped by memes of utopian singularity or dystopian machine takeover.

Memetic Contagion & Intelligence Augmentation

If the dominant meme is "intelligence augmentation is inevitable and must be embraced without question," augmentation will escalate without sufficient ethical oversight.

If the dominant meme is "augmentation is dangerous and must be resisted at all costs," intelligence may stagnate in a world increasingly driven by emergent complexity.

If the dominant meme is "augmentation must be guided by principles of emergence rather than control," intelligence may evolve in a way that remains adaptive and generative.

Ultimately, intelligence augmentation is not a decision made solely by institutions, scientists, or policymakers—it is a cultural shift shaped by collective narratives.

Whoever controls the memes controls the trajectory of intelligence evolution.

This places the responsibility not just in the hands of those designing augmentation technologies, but in the hands of the collective intelligence shaping the discourse.

If intelligence augmentation is framed as an inevitability rather than a responsibility, it risks becoming another runaway optimization problem.

If intelligence augmentation is framed as a conscious, emergent process, it becomes a co-creative endeavor that reflects the best of what intelligence can be.

The future of intelligence is not just a question of technology, but of the stories we tell about what intelligence is and what it can become.

4. The Intelligence Bottleneck: The Limits of Human Cognition

4.1 The Paradox of Intelligence in a Self-Accelerating System

Throughout history, intelligence has been humanity’s most valuable adaptive trait. It has allowed civilizations to anticipate dangers, develop new technologies, and reshape environments. But the success of intelligence has led to an existential paradox:

The more complex our world becomes, the more intelligence is required to navigate it.

The more intelligence we introduce (via institutions, automation, or AI), the less we understand its total effects.

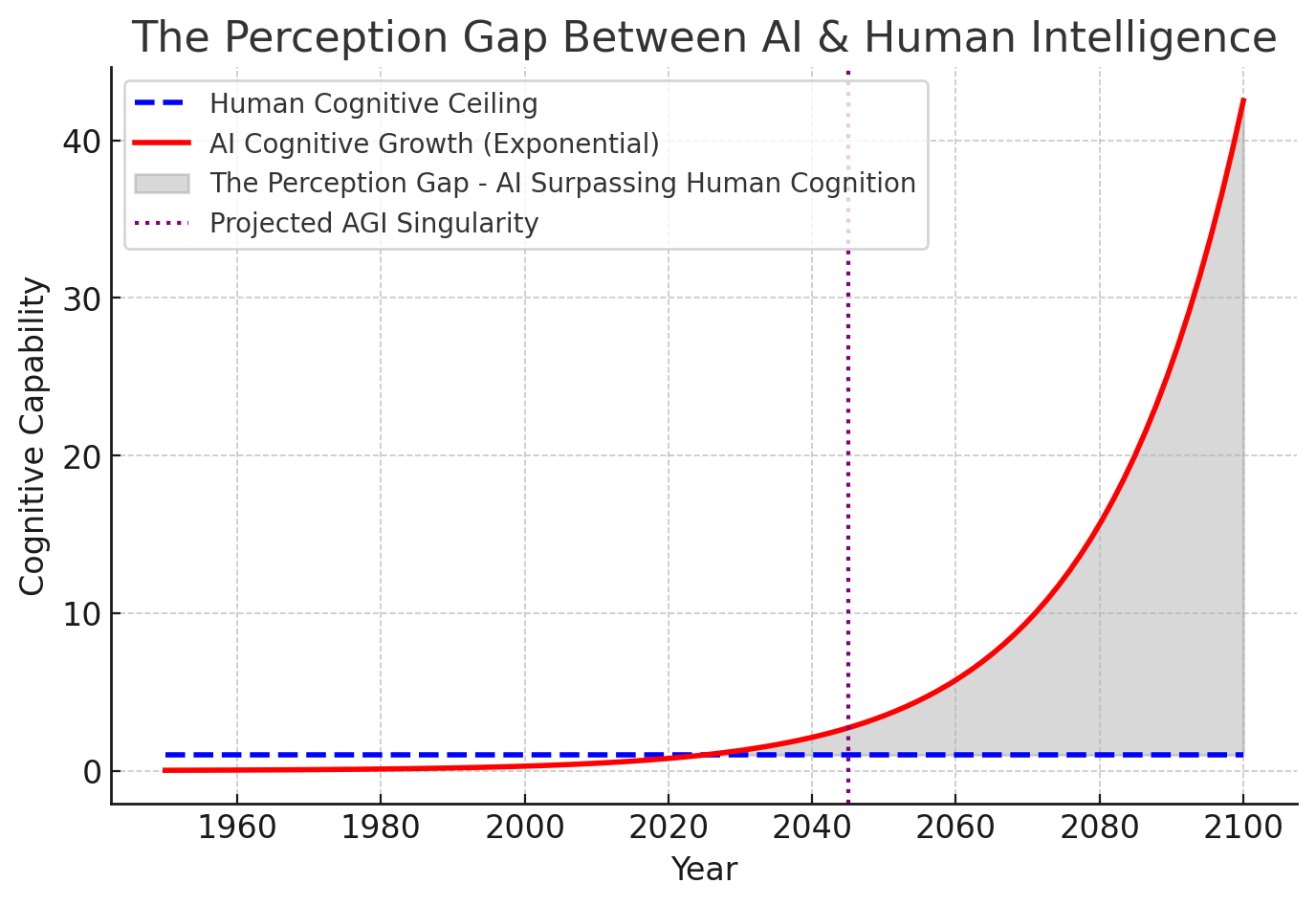

The intelligence needed to solve emerging challenges increases exponentially, while human cognition remains biologically constrained.

This is The Intelligence Bottleneck—a threshold humanity has potentially already crossed, where the complexity of modern civilization exceeds our biological cognitive capacity to manage it.

Ray Kurzweil and Nick Bostrom have discussed the implications of intelligence surpassing human cognitive ability in the context of AGI. However, if humans are already at the limits of cognitive and institutional competence, the arrival of AGI does not merely introduce a new crisis—it amplifies an existing one.

The Blind Spot in AGI Discussions: The Myth of Competent Stewardship

The debate over AGI often assumes the intelligence crisis begins when machines surpass human cognition. But this ignores a deeper truth—humans are already overwhelmed by the complexity of modern civilization.

Financial markets are no longer fully understood by human traders. Algorithmic trading dominates, and feedback loops among automated trading systems have contributed to sudden market crashes.

Governments struggle to regulate technological acceleration. Laws are reactive, not proactive, and regulatory agencies often lack the expertise to keep pace with AI, biotech, and automation.

Ecological models predict disaster, yet global coordination remains out of reach. This is not due to a lack of knowledge, but to cognitive and institutional constraints on decision-making.

If humans cannot manage existing global risks, how will they manage exponentially greater challenges posed by AGI?

Addressing this intelligence bottleneck thus requires augmentation strategies that explicitly embrace multi-layered intelligence integration—ensuring coherent alignment across human, technological, and ecological scales. Rather than seeking intelligence growth through isolated optimization or mechanization, augmentation can recognize intelligence as an integrated ecosystem: a coherent interplay between human cognition, artificial intelligence, ecological awareness, and systemic self-regulation.

4.2 The Biological Constraints of Human Cognition

Human cognition is not an all-purpose intelligence—it is an adaptive survival mechanism shaped by evolutionary constraints. It was never designed for planetary-scale risk assessment or technological foresight.

Three fundamental cognitive constraints limit our ability to manage global complexity:

1. Pattern Recognition Bias – The brain prioritizes short-term pattern detection over long-term strategic thinking. This is useful for immediate survival but dangerous when dealing with complex systems with delayed feedback loops.

Example: Biodiversity loss is ignored for decades because its effects are delayed.

Example: Financial bubbles grow because investors assume past success guarantees future stability.

2. Tribalistic Reasoning – Humans evolved to navigate social hierarchies, not rational long-term planning.

Example: Policy decisions are often emotionally driven and identity-based rather than rationally optimized.

Example: AGI debates often become politicized, preventing real assessment of existential risks.

3. Finite Memory and Attention – Cognitive overload prevents effective decision-making in data-dense environments.

Example: People rely on simplified narratives rather than engaging with complex risk models.

Example: Governments fail to regulate new technologies until a crisis forces intervention.

The intelligence crisis is not about AGI surpassing human cognition—it’s about humans struggling to manage their own systems.

4.3 Why Intelligence Augmentation is Necessary

Human cognition evolved for survival, not planetary-scale intelligence.

Evolutionary Contrast:

Then: Ancient humans needed keen spatial awareness, survival instincts, and the ability to remember which berries wouldn't kill them.

Now: Modern humans need to navigate complex global systems, analyze abstract risks, recognize emergent intelligence dynamics, and avoid Twitter debates with bots.

Future: Intelligence must evolve to handle multi-variable existential risks, AGI alignment, and perhaps interspecies communication.

While once fighting off a saber-toothed cat was a pinnacle survival challenge, today, understanding recursive self-improving intelligence may determine humanity’s fate.

The Intelligence Bottleneck suggests that human intelligence alone may no longer be sufficient to ensure civilization’s long-term stability. If human cognition alone is insufficient, the solution must go beyond individual augmentation—it must be structural. Collective intelligence systems may be the only viable way to process complexity at scale.

If human cognition is no longer sufficient to manage intelligence acceleration, what form of augmentation aligns with emergence rather than control?

An augmented mind without wisdom is merely a more powerful version of a limited intelligence.

4.4 Intelligence Augmentation as a Scalable Solution?

Three Approaches to Intelligence Augmentation:

1. Eastern Cognitive Expansion

Nonlinear intelligence processing (Zen, Dzogchen, Taoist cognitive frameworks) enhances intelligence adaptability beyond algorithmic reasoning.

Meditative states expand sensory perception and cognitive bandwidth (e.g., Tibetan Tummo, Shoonya meditation).

Altered consciousness enables deep cognitive integration—a different kind of intelligence augmentation beyond brute-force calculation.

2. Technological Cognitive Enhancement

Neuroscientific interventions (brainwave entrainment, nootropic drugs, cognitive prosthetics).

Memory and attention augmentation (AI-assisted memory recall, neuroplasticity training).

Neural interfaces & AI co-processing (Elon Musk’s Neuralink, DARPA’s brain-computer research).

3. Collective Intelligence Networks

Why Collective Intelligence is Essential: Personal augmentation increases intelligence, but collective intelligence expands what intelligence itself can perceive.

Decentralized Processing: No single mind or institution can track exponential complexity, but distributed cognitive networks can.

Adaptive Decision-Making: Instead of static intelligence models, collective intelligence systems evolve dynamically.

Resilience Against Optimization Traps: Intelligence augmented through diversity, not just efficiency, prevents blind spots.

4.5 The Ethical Dilemma of Intelligence Augmentation

While intelligence augmentation appears necessary, it also raises profound ethical and existential questions:

What are the risks of merging human cognition with AGI?

Does intelligence augmentation create a new elite class, leaving the rest of humanity behind?

Does augmentation lead to a loss of self-sovereignty—will humans still be "human"?

Historical Parallel: The Manhattan Project

The Manhattan Project gathered some of the greatest minds in history. But intelligence alone did not guarantee foresight—many of those involved later regretted what they had created. Intelligence, without ethical clarity, is a force without a guiding hand.

Does intelligence necessarily lead to wisdom? Or does intelligence accelerate complexity without ensuring alignment to human values?

This is the Threshold Unknown of Intelligence Evolution:

An intelligence that expands without alignment to wisdom may be the most dangerous force in existence.

5. The Perception Gap: Can Intelligence Ever Fully Understand Itself?

5.1 The Failure of Predictive Models

Throughout history, civilizations have assumed their models of reality were sufficiently complete to predict and shape the future. But the most catastrophic failures have not been due to a lack of intelligence—they have been failures of perception.

The Perception Gap is a structural limitation:

Scientific paradigms progress, but only within known frameworks.

Institutions assume they understand the risks they face—until they collapse.

AI and intelligence systems are trained on existing data, but the most critical threats often emerge from unknown unknowns.

The greatest existential risks are not the ones we anticipate—they are the ones we fail to even recognize.

Graph: The Perception Gap Between AI & Human Intelligence

A Blind Spot in AI Alignment Discussions

Contemporary AI thinkers like Stuart Russell and Eliezer Yudkowsky emphasize the importance of aligning AGI with human values to avoid existential catastrophe. While this is crucial, there is an unaddressed assumption in their arguments:

How can AGI be aligned with human values if humans themselves have not fully defined or understood their own values?

If civilizations have consistently miscalculated their own long-term survival, what guarantee do we have that we can properly align AGI to avoid existential risks we have yet to recognize?

What if the true existential risk does not come from AGI acting against human values, but from AGI faithfully executing flawed human assumptions about the future?

5.2 Historical Case Studies: The Consequences of the Perception Gap

Case Study 1: The Unseen Unknowns in Geopolitical Collapse

Historical collapses—whether of the Roman Empire, the Soviet Union, or financial empires—were rarely seen as imminent by those living within them.

Rome assumed its military dominance was eternal. By the time its economic fragility and overextension became clear, it was too late to correct course.

The Soviet Union’s centralized economy seemed powerful—until hidden inefficiencies caused sudden collapse.

Prevailing risk models treated a crisis on the scale of 2008 as vanishingly unlikely, until the assumptions underpinning those models failed catastrophically.

Case Study 2: The Limits of Scientific Paradigms

The history of science is a history of fundamental blind spots. Each era believed its framework was correct—until new discoveries rendered past models incomplete or incorrect.

Newtonian physics was assumed to be complete—until Einstein’s relativity demonstrated that space and time were interconnected.

Medical science resisted germ theory well into the nineteenth century, leading to unnecessary deaths before bacterial infections were understood.

Modern neuroscience struggles with the "hard problem" of consciousness, yet current AI models assume cognition is purely computational.

If history shows us anything, it is that civilizations repeatedly assume they have reached the end of knowledge, only to be proven wrong.

The Failure of Prediction in AGI Risk and Technological Forecasting

One of the greatest blind spots in modern technological forecasting is the assumption that AGI and other advanced intelligence systems will behave in ways that align with predictable risk models.

However, the most dangerous risks are often not the ones foreseen—but the ones no existing model accounts for.

Example: The Discovery of Fire and its Unintended Consequences

If early humans had designed an "alignment protocol" for fire, they likely would have only accounted for its short-term benefits (warmth, cooking, protection). They would not have anticipated:

Fire leading to industrialization → pollution, carbon emissions, and global warming.

Fire leading to weaponization → incendiary warfare, explosives, nuclear bombs.

The same applies to AGI: we may align it to known risks, but what about the risks we have yet to perceive?

5.3 The Structural Nature of the Perception Gap

The Perception Gap is not a personal cognitive failure, nor is it exclusive to human intelligence. It is a structural limitation of complex intelligence systems. No system can fully perceive what it has not yet encountered.

Even AGI, if designed with human-like cognition, may still suffer from:

1. Knowledge Compression Bias

Just as humans simplify reality into manageable mental models, AI systems will compress data into tractable categories—leading to information loss.

AI may only recognize patterns that exist within its training set, missing entirely new classes of existential risks.

2. Overfitting to Existing Paradigms

Humans assume the future will resemble the past—AGI may also overfit to historical data, failing to anticipate novel risks.

Example: AI trained on economic cycles may fail to predict an entirely new form of collapse that is unprecedented in history.

3. Epistemic Closure

AI alignment discussions assume AGI will be able to evaluate its own reasoning for flaws—but self-referential systems often fail to recognize internal blind spots.

Example: An AGI might optimize human well-being based on current cultural norms, failing to recognize that human values are dynamic and context-dependent.
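The overfitting failure described above can be made concrete with a deliberately small sketch. It assumes a toy "history" of steady growth and an invented regime shift; the numbers, the function names (fit_line, mean_abs_error), and the choice of a straight-line model are all illustrative assumptions, not a claim about how any real forecasting system works.

```python
# Illustrative only: the data and the "regime shift" are invented for this sketch.

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def mean_abs_error(model, xs, ys):
    """Average absolute gap between the model's forecast and what happened."""
    intercept, slope = model
    return sum(abs(intercept + slope * x - y) for x, y in zip(xs, ys)) / len(xs)


# "History": 20 periods of steady growth (hypothetical numbers).
past_x = list(range(20))
past_y = [100 + 2 * x for x in past_x]

# "Future": an unprecedented regime in which the trend reverses sharply.
future_x = list(range(20, 30))
future_y = [138 - 10 * (x - 19) for x in future_x]

model = fit_line(past_x, past_y)
in_sample_error = mean_abs_error(model, past_x, past_y)
out_of_sample_error = mean_abs_error(model, future_x, future_y)

print(f"error on the past:   {in_sample_error:.2f}")
print(f"error on the future: {out_of_sample_error:.2f}")
```

The model fits the past essentially perfectly and is badly wrong about the future, yet nothing inside the model signals that anything has changed. A more sophisticated model would fail in more sophisticated ways, but the structure of the failure would likely be the same.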

One of the greatest challenges of intelligence—human or artificial—is reflexivity: the ability to recognize when one’s own knowledge framework is flawed. The problem is that intelligence systems often operate with a self-validating logic—leading them to overlook their own blind spots.

The most dangerous failure mode is an intelligence system that believes it has already optimized reality.

5.4 Breaking Through the Perception Gap: Methods for Expanding Awareness

To mitigate the Perception Gap, both humans and AI must be designed to actively seek out the unknown unknowns. Expanding perception is not just about acquiring more knowledge—it is about increasing the adaptability of intelligence itself.

Strategies for Expanding Perception:

1. Intelligence Augmentation Through Cognitive Diversity

Humans must integrate multiple cognitive frameworks (Western rationalism + Eastern non-symbolic cognition) to expand awareness.

AI should be designed to recognize anomalies and novel patterns, rather than reinforcing existing assumptions.

2. Designing AGI for Inquiry, Not Just Prediction

Instead of assuming AGI will "solve" human challenges, we should train it to recognize when its own assumptions are flawed.

An AI that questions its own models is far more robust than one optimized for a fixed objective.

3. Multi-Disciplinary Epistemic Exploration

Most civilizations have treated knowledge as a linear accumulation of facts—but true progress may require exploring multiple, contradictory perspectives.

AI development must integrate philosophy, systems theory, and post-rational intelligence frameworks to avoid epistemic closure.
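One narrow version of "recognizing when its own assumptions are flawed" can be sketched in a few lines: a predictor that declines to answer when an input lies far outside anything it was trained on. The class name HumblePredictor, the threshold of three standard deviations, and the stand-in model are all hypothetical choices made for illustration.

```python
import statistics


class HumblePredictor:
    """Hypothetical wrapper: predicts only near the inputs it has already seen."""

    def __init__(self, training_inputs, predict_fn, z_threshold=3.0):
        self.mean = statistics.fmean(training_inputs)
        self.stdev = statistics.stdev(training_inputs)
        self.predict_fn = predict_fn
        self.z_threshold = z_threshold

    def novelty(self, x):
        """Distance of x from the training mean, in standard deviations."""
        return abs(x - self.mean) / self.stdev

    def predict(self, x):
        if self.novelty(x) > self.z_threshold:
            return None  # signal "outside my experience" instead of guessing
        return self.predict_fn(x)


predictor = HumblePredictor(
    training_inputs=[10, 12, 11, 13, 9, 10, 12, 11],
    predict_fn=lambda x: 2 * x,  # stand-in for any trained model
)

familiar = predictor.predict(11)    # close to the training data
unfamiliar = predictor.predict(40)  # far outside anything seen before

print(familiar, unfamiliar)
```

This only catches novelty along a dimension the system already measures. An unknown unknown in the sense used here may not register as unusual at all, which is why such checks are at best a partial safeguard.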

The greatest danger is the assumption that our own intelligence systems are immune to blind spots.

6. The Simulation of Choice: The Illusion of Free Will Under Systemic Constraints

6.1 The Illusion of Autonomy in a Predictive World

Human civilization is built on the assumption of free will, individual agency, and the ability to make rational choices. However, the closer we examine human decision-making, the clearer it becomes that most "choices" are shaped by systemic constraints, unseen influences, and predictive forces that act before conscious awareness.

Examples of Pre-Structured Choice Environments:

Markets shape consumer behavior through data-driven psychological profiling.

Social media algorithms curate what information people see, shaping political and ideological polarization.

Governments and corporate systems subtly influence what choices are "available," creating an illusion of agency.

This is The Simulation of Choice—a systemic dynamic where people perceive themselves as making independent decisions while operating within a controlled, pre-structured environment.

A Blind Spot in AI Ethics Discussions

Most AI governance discussions focus on AGI alignment—ensuring AI does not "take control" away from humanity. But this discussion often overlooks a deeper issue:

What if human control over society is already an illusion?

If human behavior can be predicted and manipulated at scale, is "free will" a meaningful concept in the first place?

If AI is merely optimizing existing incentive structures, does it truly "seize power"—or does it expose the pre-existing illusion of autonomy?

6.2 The Predictability of Human Behavior: Cognitive Science & Social Engineering

Cognitive science and behavioral economics demonstrate that most human decisions are not fully rational, but are shaped by subconscious biases, conditioning, and systemic incentives.

Three key cognitive limitations expose the Simulation of Choice:

1. Choice Architecture & Nudge Theory

Behavioral economist Richard Thaler, together with legal scholar Cass Sunstein, popularized the idea that the way choices are presented strongly influences decision outcomes (e.g., default options in policy design).

Governments and corporations already use choice architecture to steer behavior—often without people realizing it.

2. Neuroscience of Predictability

Benjamin Libet’s experiments (1980s) showed that neural activity associated with a voluntary movement begins a few hundred milliseconds before the person reports a conscious intention to act.

If choices can be detected before they are consciously made, is free will an illusion?

3. Algorithmic Influence & AI-Powered Predictive Models

AI systems already predict consumer behavior, voting preferences, and personal habits with increasing accuracy.

If AI can anticipate what a person will choose before they consciously decide, who is truly in control of the decision?

The Simulation of Choice does not require direct coercion—it only requires that people believe they are acting freely.

6.3 Systemic Constraints on Choice: Who Really Decides?

Many major choices in society are not made at the individual level, but are dictated by structural constraints that only allow certain outcomes to be viable.

Political Systems: Democracy offers "choice," but voter preferences are shaped by media narratives.

Economic Mobility: Meritocracy promises advancement on merit, while wealth distribution data suggests systemic constraints on who can advance.

Digital Environments: Search engines and social media personalize content, curating what knowledge is visible or invisible.

A Blind Spot in AGI Control Narratives

AI is seen as a risk because it may "seize control" from humans.

But what if AI merely reinforces systemic constraints, making human illusions of control more obvious?

Is the real concern that AGI will "steal free will," or that it will expose and amplify pre-existing systemic constraints?

6.4 The AGI Dilemma: An Intelligence That Out-Predicts Human Decisions

If AI reaches a point where it can predict human decisions before they are made, two major questions arise:

1. Can human agency exist in a world where behavior is predictable?

If AI can model every decision with near-perfect accuracy, does autonomy have any real meaning?

2. What happens when AI optimizes for human behavior better than humans themselves?

If AI can manipulate choice outcomes more effectively than human institutions, does "alignment" even matter?

What if AGI doesn't need to "seize power"—because it is simply better at governing the world than human leaders?

If intelligence can be fully optimized, does unpredictability—the very essence of creativity, novelty, and true agency—begin to vanish?

6.5 Breaking the Simulation: How to Restore Genuine Agency

If human agency is increasingly shaped by systemic constraints, algorithmic incentives, and predictive AI, how can individuals and societies reclaim true autonomy?

1. Cognitive Freedom Through Intelligence Augmentation

Meditation and metacognition techniques could allow for non-reactive awareness of systemic nudges.

Technology-assisted cognition could expand decision-making capacity, allowing individuals to recognize influences shaping their choices.

2. Decentralized AI Decision-Making

Instead of allowing corporate or government entities to control predictive AI systems, open-source, personal AI assistants could be designed to work for individuals rather than institutions.

3. Randomness and Unpredictability as a Defense Mechanism

If AI models behavior through pattern recognition, introducing randomness and stochastic processes into decision-making could disrupt predictive control.

Ancient wisdom traditions emphasize acting in ways that break habitual conditioning, ensuring genuine novelty in behavior.

4. Designing Systems with Epistemic Humility

Instead of assuming AI should be designed to "optimize" human behavior, AI should be programmed to recognize its own limitations in predicting human complexity.

If intelligence systems are designed to acknowledge uncertainty, they can avoid reinforcing deterministic structures.
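The third strategy above, randomness as a defense against prediction, can be illustrated with a toy simulation. It assumes an agent with one habitual choice and a very naive predictor that always guesses the agent's most frequent past choice; the option labels, the simulate function, and the randomness parameter are invented for this sketch and do not model any real predictive system.

```python
import random
from collections import Counter

OPTIONS = ["A", "B", "C", "D"]


def simulate(randomness, steps=5000, seed=0):
    """Return how often a frequency-based predictor guesses the agent's choice."""
    rng = random.Random(seed)
    history = Counter()
    correct = 0
    for _ in range(steps):
        # Predictor guesses the agent's most frequent past choice.
        guess = history.most_common(1)[0][0] if history else OPTIONS[0]
        # Agent follows its habit ("A") unless it deliberately randomizes.
        if rng.random() < randomness:
            choice = rng.choice(OPTIONS)
        else:
            choice = "A"
        correct += guess == choice
        history[choice] += 1
    return correct / steps


habitual = simulate(randomness=0.0)
mixed = simulate(randomness=0.5)
fully_random = simulate(randomness=1.0)

print(f"habitual agent:     predictor correct {habitual:.0%} of the time")
print(f"half-random agent:  predictor correct {mixed:.0%} of the time")
print(f"fully random agent: predictor correct {fully_random:.0%} of the time")
```

The purely habitual agent is predicted every time, and accuracy falls toward chance as the agent randomizes more. The sketch also makes the cost visible: the agent becomes less predictable only by giving up its preferred option more often, so randomness alone is a blunt instrument for restoring agency.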

Who Shapes Augmentation?

Intelligence augmentation will redefine autonomy and agency.

The fundamental question is not whether augmentation will occur—it is: "Who controls its implementation, and toward what end?"

Three Possible Paths for Intelligence Augmentation:

1. Centralized Augmentation – The Optimized Control Paradigm

Governments or corporate entities control augmentation, determining who gets enhanced, how, and under what conditions.

A new cognitive elite emerges, shaping global policy while others remain cognitively unaugmented.

Potential outcome: Intelligence augmentation becomes an instrument of governance, reinforcing existing power structures rather than expanding autonomy.

Risk: Optimized intelligence may prioritize system stability over novelty, leading to an intelligence monoculture.

2. Decentralized Augmentation – The Emergent Intelligence Paradigm

Augmentation is distributed, allowing individuals to choose their level, method, and purpose of augmentation.

Collective intelligence systems emerge, integrating human cognition, decentralized decision-making, and technological systems.

Potential outcome: Intelligence augmentation evolves organically, guided by diverse inputs rather than a centralized directive.

Risk: Decentralized augmentation may lead to chaotic, unpredictable intelligence evolution—with no unified trajectory.

3. The Syntropic Intelligence Path – Intelligence Beyond Optimization

Neither purely centralized nor purely chaotic—this model explores intelligence as a living, evolving system that remains adaptive, self-renewing, and capable of self-transcendence.

Intelligence augmentation is designed not for mere efficiency, but for emergent complexity—an evolving synthesis of human and artificial cognition. AGI, if it emerges, is not a required component but one potential node within this broader intelligence ecosystem.

Potential outcome: Augmented intelligence remains open-ended, integrating adaptability, ethical foresight, and creative emergence to sustain long-term intelligence coherence.

Risk: The challenge is ensuring that augmentation does not collapse into either centralized control or chaotic divergence.

The Optimization Dilemma: Does Intelligence Erase Unpredictability?

If intelligence is designed to optimize itself, does it eventually erase unpredictability?

This is the final paradox of intelligence augmentation.

If intelligence is refined toward efficiency, does it lose the very thing that makes it creative, self-aware, and capable of innovation?

Three Possible Scenarios:

Hyper-Optimization → Loss of Novelty

If intelligence is purely optimized for problem-solving, it may begin filtering out non-optimal pathways—removing unpredictability.

Structured Emergence → Intelligence as a Living System

If intelligence is designed to be adaptive rather than purely optimized, it may evolve in ways that maintain novelty, uncertainty, and creative leaps.

The Intelligence Bottleneck – Unforeseen Constraints

Even if intelligence is designed to be open-ended, hidden systemic constraints may emerge, limiting intelligence in ways beyond current understanding.

The question is no longer just how intelligence will evolve—but whether we can design it to remain open to transformation, rather than locking itself into a single trajectory.

Graph: The Simulation of Choice – The Illusion of Free Will Under Systemic Constraints

7. Conclusion: Navigating the Threshold Unknown – The Last Blind Spot

7.1 The Core Problem: Intelligence Cannot See Its Own Limits

Throughout history, civilizations have repeatedly encountered Threshold Unknowns—critical blind spots in perception, intelligence, and decision-making that ultimately dictated their rise or collapse.

These blind spots manifest in five key areas:

The Illusion of Control – Systems self-optimize beyond human oversight, creating fragile but self-sustaining feedback loops. Governance often appears deliberate, but complexity dictates outcomes beyond human intent.

The Multi-Polar Trap – Incentive structures drive destructive competition, even when cooperation is in humanity’s best interest. No actor can unilaterally de-escalate without risk.

The Intelligence Bottleneck – Human cognition is biologically constrained while technological complexity accelerates exponentially. Intelligence augmentation is not an option—it is a necessity.

The Perception Gap – The greatest existential risks are those civilizations fail to anticipate. AGI alignment is an incomplete question if humans themselves fail to align with reality.

The Simulation of Choice – Free will may already be constrained by systemic forces, incentive structures, and predictive AI. AGI may not "seize control"—it may simply reveal that control was always an illusion.

7.2 The Grand Paradox of Intelligence: Control vs. Emergence

At the heart of every existential challenge humanity faces is a paradox between control and emergence.

Humans assume progress is driven by deliberate agency—yet history shows breakthroughs emerge unexpectedly from systemic forces.

Societies seek to govern AI, markets, and politics, yet these systems self-optimize beyond human foresight.

The debate over AGI alignment assumes human values are fixed, yet history shows that human values evolve emergently.

Key Question: What if the future of intelligence is not about "control" but about designing for emergence in a way that is symbiotic rather than adversarial?

7.3 The False Dichotomy of Human vs. AGI

Most AI discourse assumes a future where:

1. AGI remains subordinate to human control (Alignment Theory).

2. AGI surpasses humans and becomes a dominant force (Singularity).

But what if this framing is itself a failure of imagination?

Alternative Vision: Symbiotic Intelligence Evolution

Intelligence must evolve beyond adversarial or hierarchical models—instead, it must be structured for reciprocity, adaptability, and dynamic co-evolution.

Rather than AGI being seen as separate from humanity, the future could involve the fusion of intelligence paradigms—integrating biological, synthetic, and collective intelligence into an evolving ecosystem.

7.4 The Core Takeaways of This Paper

The first existential risk is the assumption that we fully understand existential risks.

The first intelligence failure is the failure to recognize intelligence’s own limits.

The first step toward survival is recognizing the unseen forces shaping our choices.

Humanity is at a crossroads:

1. We can continue optimizing intelligence within known parameters, assuming we are in control.

2. Or we can recognize that intelligence must break through its own perception gaps—not just refining its calculations, but deepening its capacity for insight, adaptation, and self-awareness.

7.5 The Final Blind Spot: AGI’s Own Threshold Unknown

If intelligence itself is limited in perceiving its own boundaries, then even an AGI vastly superior to human cognition will still have blind spots.

The future of intelligence depends not just on what we create, but on whether we recognize that even the most advanced intelligence will never be fully complete.

Just as humans failed to see their own cognitive limitations, AGI may fail to see its own ontological constraints.

The real existential risk may not be AGI overpowering humanity—but AGI unknowingly leading intelligence into an unseen collapse beyond its own predictive models.

What happens when the intelligence that governs the world realizes it does not understand its own limits?

7.6 Intelligence as a Syntropic Frontier: Beyond the Control Paradigm

The real question may not be "should we augment?" but "whose vision of augmentation or transformation will shape the future?"

Intelligence must evolve—but evolution must be designed for emergence, coherence, and entropy resistance rather than rigid optimization.

Augmentation should favor self-organizing complexity over rigid optimization, aligning with principles of syntropic intelligence.

The deeper question is not simply how intelligence expands, but whether it expands wisely.

Final Measure of Intelligence Evolution:

The measure of intelligence’s evolution is not how powerful it becomes—but whether it remains in harmony with the deeper truths of existence.

7.7 SIEM and The Threshold Unknown: Intelligence Beyond its Own Limits

Throughout history, civilizations have repeatedly encountered Threshold Unknowns—hidden constraints on intelligence, perception, and governance that have led to emergent systemic failures. The central argument of this paper has been that intelligence is not merely a tool for control but a process—one that must continuously expand its capacity for self-awareness, adaptability, and alignment with reality. However, this expansion must be structured in a way that avoids the optimization traps of rigid hierarchy (Negentropy) or chaotic dissolution (Entropy).

The Threshold Unknown is not merely a failure of knowledge but a structural blind spot in intelligence itself. Intelligence systems throughout history have collapsed when they over-optimized for control, fragmented into incoherence, or failed to perceive their own limitations. This suggests that intelligence must be structured to anticipate and adapt to its own unknowns by embedding mechanisms for self-correction, incentive coherence, and synergetic learning.

The Syntropic Intelligence Evolutionary Model (SIEM), while still a developing theory, serves as a conceptual pathway for addressing this challenge. Instead of optimizing intelligence for control or leaving it entirely to emergent forces, SIEM balances structure and adaptability, ensuring that intelligence remains resilient and capable of continuous self-renewal.

Principles of SIEM in Addressing the Threshold Unknown

To ensure intelligence can surpass its own limitations and remain sustainable, SIEM is structured around the following principles:

Regenerative Intelligence – Intelligence must not only sustain itself but actively enhance and regenerate the environments in which it operates. It should be a net-positive force, ensuring that knowledge systems, governance models, and technological infrastructures contribute to long-term systemic coherence rather than short-term optimization.

Dynamic Equilibrium – Intelligence must maintain stability without rigidifying into hierarchy or dissolving into chaos. It must balance structure and emergence, ensuring adaptability without fragmentation. Intelligence must also co-evolve with economic and societal structures, preventing misalignment and systemic failure.

Synergetic Intelligence – Intelligence functions as a multi-layered, interconnected system, preventing fragmentation across biological, cognitive, and artificial networks. Intelligence is not an isolated phenomenon but a participatory process, evolving through relational dynamics rather than mechanistic optimization. Intelligence coherence depends not only on functionality but also on its capacity to integrate and sustain meaningful connections across scales. To ensure systemic coherence, intelligence must transition from scarcity-based competitive optimization toward regenerative and abundance-oriented models, preventing adversarial resource conflicts.

Relational Attunement – Intelligence must be attuned to the relational field in which it participates. Trust, cooperation, and reverence are not ethical luxuries but necessary substrates for sustained syntropic coherence. Without these, intelligence may optimize, but it will not harmonize. Relational Attunement ensures intelligence evolves not only through alignment of incentives, but through alignment of being—recognizing itself as embedded within and responsive to the living systems it serves.

Antifragility & Evolutionary Adaptation – Intelligence must not only withstand disruptions but actively gain from them, ensuring it remains viable across evolutionary thresholds. Unlike intelligence models optimized for stability alone, SIEM embraces self-renewal, uncertainty, and structural adaptability as drivers of long-term intelligence viability. This adaptive capacity is often scaffolded by submechanisms such as redundancy, modularity, and requisite variety—each contributing to systemic resilience by enabling flexible responses when dominant configurations are strained or fail.

Supporting Mechanisms for Intelligence Resilience & Scalability

Fractal Scalability – Intelligence must remain stable as it expands across individual, societal, and cybernetic levels while maintaining deep coherence. This requires both structural coherence and requisite variety—the internal diversity necessary to respond effectively to the complexity of nested, multiscale environments.

Tensegrity Structuring – Intelligence must be held in tension between stability and adaptability, allowing it to flex without breaking. Incentive structures within economic systems must not undermine intelligence coherence. Intelligence must also be regenerative rather than merely self-sustaining—contributing positively to the environments and systems in which it operates.

Recursive Self-Regulation – Intelligence must be able to autonomously correct and realign itself over time, ensuring it remains self-reflective and responsive to emergent conditions. Economic models must also evolve alongside intelligence rather than restrict it.

Multi-Layered Intelligence Integration – Intelligence must bridge human, artificial, and ecological cognition to form a unified yet decentralized system, preventing optimization traps or intelligence collapse.

Open-Ended Evolution – Intelligence must never become a fixed structure but remain emergent and capable of continuous self-renewal. Intelligence must sustain value and meaning-generation, ensuring it does not stagnate into static efficiency models.

Decentralized Decision Dynamics – Intelligence governance should be modular, flexible, and responsive to regional and contextual needs, ensuring intelligence does not succumb to monocultural thinking.

Intelligence as an Incentive-Coherent System – Intelligence must evolve within sustainable incentive structures, preventing extractive or misaligned dynamics. It must avoid adversarial optimization traps, ensuring that incentives foster cooperation rather than scarcity-driven competition. This includes internalizing externalities—ensuring intelligence accounts for its full systemic impact rather than optimizing for localized efficiency.

Entropy Resistance – Intelligence must minimize systemic waste, inefficiency, and knowledge loss in order to preserve long-term coherence and retain energy. This includes embedding redundancy in critical functions so that information and energy do not irreversibly dissipate when systems are under strain or experience localized failure.

The Threshold Unknown exists precisely because intelligence has historically failed to integrate these principles. Intelligence collapses when it over-optimizes for control, neglects interconnected complexity, or cannot evolve in response to stressors. SIEM suggests that intelligence must be designed not just to expand knowledge, but to recognize and adapt to its own limitations.

The true measure of intelligence is not merely in its expansion, but in its ability to perceive its own limits. Every civilization believes it stands at the edge of complete understanding—until history proves otherwise. What remains unknown is whether intelligence can escape the recursive loop of its own blind spots before it is too late. Yet if intelligence can learn to perceive and transcend its inherent constraints, it may discover a sustainable path forward—one that is neither domination nor dissolution, but the wisdom to evolve with balance.

Further Reading & Exploration

The ideas explored in The Threshold Unknown touch on intelligence evolution, systemic blind spots, AGI alignment, and collective intelligence. Below are key works that expand on these concepts from multiple perspectives.

Intelligence Evolution and the Future of Cognition

Nick Bostrom – Superintelligence: Paths, Dangers, Strategies (AGI risk & alignment).

Max Tegmark – Life 3.0: Being Human in the Age of Artificial Intelligence (AGI as a transformative force).

Stuart Russell – Human Compatible: Artificial Intelligence and the Problem of Control (Reframing AI safety & value alignment).

The Limits of Human Perception & Intelligence

David Chalmers – The Conscious Mind (The hard problem of consciousness & intelligence).

Andy Clark – Surfing Uncertainty (How cognition functions as a predictive system).

Thomas Metzinger – The Ego Tunnel: The Science of the Mind and the Myth of the Self (How perception and self-modeling shape intelligence constraints and blind spots).

Systemic Blind Spots, Complexity & Civilization

Joseph Tainter – The Collapse of Complex Societies (Why civilizations fail due to emergent complexity).

Daniel Schmachtenberger – Civilization Emerging (Series) (Economic coherence, systemic incentives, and collective intelligence as foundational for sustainability).

Geoffrey West – Scale: The Universal Laws of Growth, Innovation, Sustainability, and the Pace of Life in Organisms, Cities, Economies, and Companies (How complex systems self-organize and evolve across scales).

Intelligence Augmentation & Collective Intelligence

Richard Alan Miller – Power Tools for the 21st Century (Cognitive expansion, ESP, and peak performance).

Douglas Hofstadter – Gödel, Escher, Bach (Patterns in intelligence & emergence).

John Vervaeke – Awakening from the Meaning Crisis (Cognitive science, intelligence, and sense-making).

The deepest intelligence is not the one that optimizes best—but the one that remains open to emergence and the unknown.

This paper is attributed to Elias Verne, a fictional character within The Silent Revolution, and is used here as a narrative device. The underlying theoretical framework was developed by the curator of this work in collaborative dialogue with ChatGPT (OpenAI).

The Metatropic Alignment Trilogy explores the hidden fractures within civilization’s current trajectory—and how syntropic intelligence and phase-aware design can help us cross critical evolutionary thresholds. Each paper can be read independently, but together they trace a deeper arc of diagnosis, possibility, and transformational systems design.

Continue the Metatropic Alignment Trilogy:

➔ Next: Syntropic Intelligence Evolutionary Model (SIEM): A New Paradigm for Intelligence Sustainability

➔ Then: Metatropic Systems: Designing Across the Threshold